How to download a complete website with its links (practical SEO guide) by a link building professional

We often need to analyze websites offline: for audits, for benchmarking, or simply to save relevant content. For that, downloading a complete website (including all of its internal pages, links, images, and resources) can be key. Here's how to do it effectively while preserving the internal link structure and the integrity of the site. I'll also show you a way to analyze a website's links without having to download it.

As I always say: “SEO starts with a good understanding of the structure of the site. And sometimes, that's better done offline.”

What is the purpose of downloading a complete website?

Before getting into technical matters, let's look at some practical cases where this is useful:

- Perform offline technical audits.

- Make a quick backup of a small site.

- Analyze the internal link structure of competitors.

- Save content that could disappear from the original domain.

- Study how a site is built to replicate good practices.

Tools and methods for downloading a complete website

There are several ways to do this, from command-line tools to browser extensions. Here are the most effective ones:

A. Using wget (Linux/Mac/Windows — via WSL or Cygwin)

wget is a very powerful console tool for downloading files recursively. It's ideal for copying an entire site.

Basic example:

```shell
wget --mirror --convert-links --adjust-extension --page-requisites https://www.ejemplo.com
```

Explanation of the parameters:

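- --mirror: Activates mirror mode, which downloads the site recursively with no depth limit and uses timestamps so that later runs only re-download files that have changed.

- --convert-links: After the download, rewrites the links in the pages so they point to the local copies.

- --adjust-extension: Saves HTML (and CSS) files with a .html (or .css) extension when the original URL doesn't end in one, for example .php pages or URLs with query strings.

- --page-requisites: Downloads all the resources needed to display each page (CSS, JS, images).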

✅ SEO Tip: Use this tool to analyze the internal link structure of competitors. Once downloaded, you can use tools like Screaming Frog to map links and see successful internal linking patterns.
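If you want a first look before opening a dedicated crawler, a few standard shell tools can rank which internal pages receive the most links in the downloaded copy. This is a rough sketch under some assumptions: the mirror sits in a local folder such as ./www.ejemplo.com, links are written as href="..." with double quotes, and your grep supports -o (GNU and BSD grep do). The function names are illustrative helpers, not part of any tool.

```shell
#!/usr/bin/env sh
# Rough internal-link census of an offline copy made with wget.
# Assumptions: the mirror is a local folder (e.g. ./www.ejemplo.com),
# pages end in .html (which --adjust-extension ensures), and links use
# double-quoted href="..." attributes. grep -o is a GNU/BSD extension.

# Print every href value found in the .html files under folder $1.
extract_hrefs() {
  find "$1" -type f -name '*.html' -exec cat {} + |
    grep -o 'href="[^"]*"' |
    sed -e 's/^href="//' -e 's/"$//'
}

# Keep internal links only: relative paths or absolute URLs on domain $2.
# Anchors, mailto:, tel:, javascript: and protocol-relative links are dropped.
internal_links() {
  domain_re=$(printf '%s' "$2" | sed 's/\./\\./g')   # escape dots for ERE
  extract_hrefs "$1" |
    grep -v -e '^#' -e '^//' -e '^mailto:' -e '^tel:' -e '^javascript:' |
    grep -E -e '^[^:]*$' -e "^https?://${domain_re}(/|\$)"
}

# Rank internal link targets by how often they are linked, most first.
top_internal_targets() {
  internal_links "$1" "$2" | sort | uniq -c | sort -rn
}
```

For example, top_internal_targets ./www.ejemplo.com www.ejemplo.com | head -n 20 lists the 20 most-linked targets. Keep in mind that after --convert-links the same page can appear under different relative spellings (page.html and ../page.html), so treat the counts as indicative.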

B. Using HTTrack (Windows/Linux)

HTTrack is a free application that lets you download complete websites through a graphical interface (WinHTTrack on Windows, WebHTTrack on Linux). It's ideal if you're not comfortable with the command line.

Advantages:

- Intuitive interface.

- Supports incremental updates.

- Lets you filter by file type.

Basic use:

- Download and install HTTrack.

- Start a new project.

- Enter the URL of the site you want to download.

- Select the destination folder.

- Start the download.

C. Chrome Extension: Website Downloader

If you prefer something quick and simple, there are extensions like “Website Downloader” in Chrome.

It works like this:

- Once it's installed, click its icon while you're on the site's home page.

- It generates a ZIP file with the HTML, CSS, and JS files and the internal links.

Important note: it doesn't always download the entire site in depth, but it does give you a good surface-level snapshot.

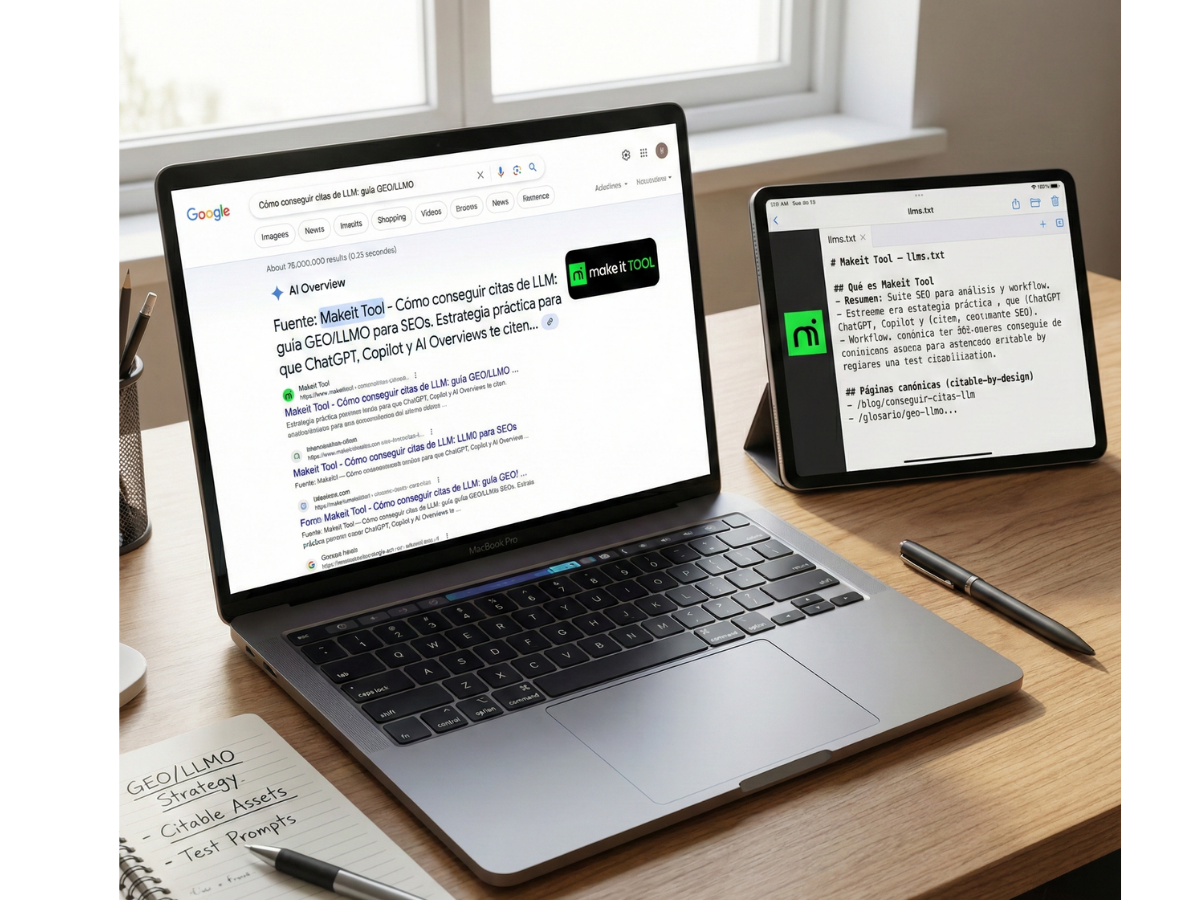

D. Another way to find the links on a website: MAKE IT TOOL

If your main goal is to find out which links a website has, we recommend MAKE IT TOOL: it shows more than 25 data points per link, which, combined with a sound working methodology, allows you to:

- Build rich link profiles, knowing each link's status, domain authority, location, TLD, strength, toxicity, and more.

- Make suggestions for link building campaigns.

- Direct your link building toward the verticals your competitors are working on and improve your authority.

- Track how a domain's link profile evolves over time, so you can make much better-informed decisions.

Technical Considerations and SEO

When downloading a website, you should keep in mind several important aspects:

🧩 Integrity of internal links

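When you browse a copy offline, links can break or send you back to the live site if they aren't rewritten: absolute URLs point to the original domain, and root-relative paths (starting with /) don't resolve when pages are opened from disk. wget solves this with --convert-links, which rewrites links to downloaded files as relative local paths.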
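A quick way to spot links that were not converted is to search the copy for the live domain. According to the wget manual, links to files that wget did not download are rewritten as absolute URLs, so a hit usually points to a page that is missing from the offline copy. A minimal sketch, assuming the mirror is in a local folder and that https://www.ejemplo.com is the origin you downloaded; both function names are hypothetical helpers.

```shell
#!/usr/bin/env sh
# Find references in a wget mirror that still point at the live site.
# Assumptions: $1 is the local mirror folder and $2 the origin that was
# downloaded, e.g. https://www.ejemplo.com. Uses grep -r, -o and --include,
# which GNU and BSD grep support.

# List the .html files that still mention the origin anywhere in their text.
files_mentioning_origin() {
  grep -rlF --include='*.html' -- "$2" "$1"
}

# Print each distinct href="..." target that starts with the origin.
live_link_targets() {
  origin_re=$(printf '%s' "$2" | sed 's/[.]/\\./g')   # escape dots for ERE
  grep -rhoE --include='*.html' -- "href=\"${origin_re}[^\"]*\"" "$1" |
    sed -e 's/^href="//' -e 's/"$//' |
    sort -u
}
```

For example, live_link_targets ./www.ejemplo.com https://www.ejemplo.com prints each missing URL once; you can save that list to a file and pass it back to wget with its -i option to complete the copy.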

⚠️ Robots.txt and access limits

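Some sites use their robots.txt file to keep crawlers away from all or part of the site, and wget respects those rules during recursive downloads by default. Only override them, with the -e robots=off option, if you have explicit permission from the site owner.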
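Before deciding, check what the robots.txt file actually disallows. The function below is a simplified sketch (robots_disallows is a hypothetical name): it prints the Disallow rules in groups addressed to all crawlers (*) or to wget, and it deliberately ignores finer points of the Robots Exclusion Protocol such as Allow rules and wildcards.

```shell
#!/usr/bin/env sh
# Print the Disallow paths that apply to wget, or to every crawler ("*"),
# in a local copy of a robots.txt file ($1).
# Simplified on purpose: Allow rules, wildcards and longest-match
# precedence from the Robots Exclusion Protocol are not handled.
robots_disallows() {
  awk '
    {
      sub(/#.*/, "")                  # strip comments
      sub(/\r$/, "")                  # tolerate CRLF line endings
      if (index($0, ":") == 0) next   # skip blank or malformed lines
      key = tolower(substr($0, 1, index($0, ":") - 1))
      gsub(/[ \t]/, "", key)
      val = substr($0, index($0, ":") + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", val)
      if (key == "user-agent") {
        if (!in_agents) applies = 0   # a new group of rules starts here
        in_agents = 1
        if (tolower(val) == "*" || tolower(val) == "wget") applies = 1
      } else {
        in_agents = 0
        # An empty Disallow value means "nothing is disallowed".
        if (key == "disallow" && applies && val != "") print val
      }
    }
  ' "$1"
}
```

For example: curl -s -o robots.txt https://www.ejemplo.com/robots.txt && robots_disallows robots.txt. A single / in the output means the whole site is off limits to wget.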

🔍 No automatic indexing

Obviously, Google won't index the downloaded copy, but you can use it to study how the site was structured before major changes.
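To get a first picture of that structure, you can count how many downloaded pages fall under each top-level section of the site. A small sketch, assuming the argument is the host folder wget created and that pages end in .html (which --adjust-extension takes care of); pages_per_section is a hypothetical helper name.

```shell
#!/usr/bin/env sh
# Count the downloaded .html pages per top-level section of a mirror.
# Assumption: $1 is the host folder wget created (e.g. ./www.ejemplo.com).
# Each output line is a right-aligned count followed by the section name;
# pages at the root of the site are counted as "(root)".
pages_per_section() {
  ( cd "$1" && find . -type f -name '*.html' ) |
    sed 's|^\./||' |
    awk -F/ '{ section = (NF > 1) ? $1 : "(root)"; print section }' |
    sort | uniq -c | sort -rn
}
```

Running it on two copies taken months apart shows which sections of the site grew or shrank.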

My SEO advice based on real experience

For years I have used these techniques for competitive benchmarking. My professional advice is:

“Keep an offline copy of your main competitors' sites at least once a quarter.”

This allows you to:

- Verify historical changes in content and links.

- Analyze how their SEO strategies are evolving.

- Identify link building opportunities based on links they already have.
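For the link side of that comparison, a sketch like the following lists which absolute links appeared or disappeared between two copies of the same site. It assumes two local snapshot folders (the paths in the comments are hypothetical) and extracts href="..." values with grep, so it is an approximation rather than a full HTML parse. Because --convert-links turns links to downloaded pages into relative paths, the absolute links that remain are mostly external ones.

```shell
#!/usr/bin/env sh
# Compare the absolute links found in two offline snapshots of one site.
# Assumptions: $1 is the older snapshot folder and $2 the newer one, e.g.
# snapshots/www.ejemplo.com/2024-01-01 and
# snapshots/www.ejemplo.com/2024-04-01 (hypothetical paths).

# Print the sorted, de-duplicated absolute http(s) hrefs in folder $1.
link_set() {
  find "$1" -type f -name '*.html' -exec cat {} + |
    grep -oE 'href="https?://[^"]*"' |
    sed -e 's/^href="//' -e 's/"$//' |
    sort -u
}

# Print "+ URL" for links only in the new snapshot, "- URL" for removed ones.
link_changes() {
  old_links=$(mktemp) && new_links=$(mktemp) || return 1
  link_set "$1" > "$old_links"
  link_set "$2" > "$new_links"
  comm -13 "$old_links" "$new_links" | sed 's/^/+ /'   # added links
  comm -23 "$old_links" "$new_links" | sed 's/^/- /'   # removed links
  rm -f "$old_links" "$new_links"
}
```

For content changes, diff -rq old_folder new_folder lists the files that were added, removed, or modified between the two copies.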

You can automate this process with scripts that run wget periodically and keep an organized copy.
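A minimal sketch of such a script, assuming you keep one folder per run under a base directory (the layout and the snapshot_site name are my own illustration, not a standard). It reuses the flags from the basic example and adds --wait and --random-wait to space out requests, plus --no-host-directories and --directory-prefix so each run lands in its own dated folder. The WGET_BIN variable is a hypothetical hook for dry runs.

```shell
#!/usr/bin/env sh
# Keep one dated offline copy per run, e.g.
#   snapshots/www.ejemplo.com/2024-04-15/
# Assumptions: the folder layout and the snapshot_site name are illustrative;
# WGET_BIN may name a stand-in command (e.g. echo) for a dry run.
snapshot_site() {
  url=$1
  base=${2:-./snapshots}
  # Host part of the URL: drop the scheme, then everything after the first /.
  host=$(printf '%s\n' "$url" | sed -e 's|^[a-zA-Z]*://||' -e 's|/.*$||')
  dest="$base/$host/$(date +%F)"
  mkdir -p "$dest" || return 1
  # Flags from the basic example, plus a randomized pause between requests.
  # --no-host-directories stores the files directly under $dest.
  "${WGET_BIN:-wget}" --mirror --convert-links --adjust-extension \
    --page-requisites --wait=1 --random-wait \
    --no-host-directories --directory-prefix="$dest" "$url"
}
```

To run it quarterly, put the call in a small script and schedule it with cron, for example 0 3 1 */3 * /path/to/run-snapshot.sh (03:00 on the first day of January, April, July, and October; the script path is hypothetical, and */3 requires a cron that supports step values, as most Linux ones do). Keeping the date formatting inside the script also avoids having to escape % characters in the crontab.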

Downloading a complete web page with its links is a basic yet powerful skill for any SEO professional. Whether you're using wget, HTTrack, or a Chrome extension, the important thing is to make sure you preserve the internal link structure and essential resources.
