How to rank and be cited in Google AI Overviews (GEO + SEO)

There is no “button” for appearing in AI Overviews. What does exist is a way to increase the likelihood that Google includes you as a citable source: solid technical SEO, easy-to-summarize content, trust signals (E-E-A-T), and a measurement system for learning and repeating. Google indicates that there are no special optimizations or additional requirements beyond good SEO practices, although eligibility depends on being indexed and being eligible to be shown with a snippet.

In this guide, you'll learn how to:

- Detect which queries trigger AI Overviews in your vertical and which ones aren't worth the effort.

- Remove friction from crawling, indexing, and snippets to enter the “pool” of candidates.

- Draft summarizable, citable blocks without “sounding like AI” or like promotional copy.

- Implement practical E-E-A-T (authorship, evidence, first-hand experience) and schema without promising miracles.

- Measure citations and source changes with a reproducible (and automatable) process.

What cannot be promised: appearing always, for every keyword, or a guaranteed CTR improvement. Even if a page meets the requirements, Google reminds site owners that indexing and serving content is not guaranteed.

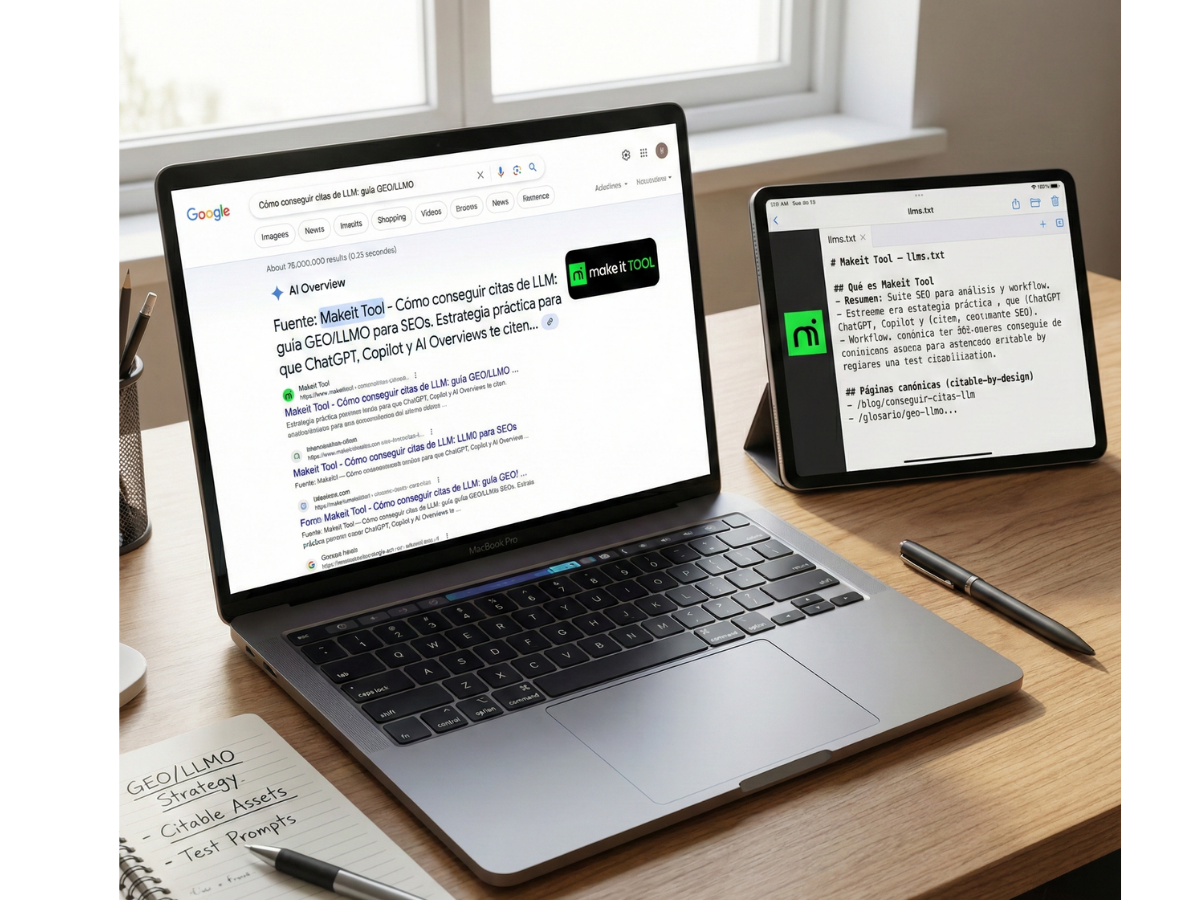

If you work with suite-type SEO tools, it's useful to have specific features to detect AI Overviews by keyword, track citations, and compare the competitors mentioned. This workflow can be done “by hand”, but it goes faster if you automate it (for example, with Makeit Tool as operational support within your stack).

What makes Google cite you in an AI Overview (without magic, with criteria)

AI Overviews are built by combining classic search systems with generative models. In its documentation for site owners, Google explains that these features show relevant links and that they can use “query fan-out” (multiple related searches) to cover subtopics and find more supporting pages.

From an SEO perspective, your goal isn't to “optimize for AI” in the abstract, but to meet two conditions:

- Be eligible and competitive in Search

In order to appear as a supporting link, the page must be indexed and eligible to show up in Google Search with a snippet (without blocks). Google states this as the minimum requirement for being included as a supporting link in these experiences.

- Be “citable” (extractable, clear, verifiable)

When the system needs to back up an assertion, it tends to prefer fragments:

- with direct answers,

- structured by sub-questions,

- with neutral and demonstrable language,

- and with signs of reliability (authorship, sources, updating).

In parallel, Google says that AI Overviews aim to back up what they present with high-quality web results and links for going deeper, and that it raises the bar for YMYL queries.

What types of searches usually trigger AI Overviews and how to detect them

Rather than relying on “intuition”, look for the patterns where AI Overviews tend to appear: complex questions, comparisons, multi-step “how” questions, decisions with trade-offs (“which is better”), and topics where the answer requires synthesis from several sources. Google mentions that these experiences are especially useful for more complex questions or comparisons and that they are not always triggered.

Practical method to detect and prioritize them:

- Review real SERPs of your keyword set (incognito mode + target location if applicable).

- Label intent (informational, comparative, “how-to”, navigational, transactional).

- Group by clusters and mark where an AI Overview appears repeatedly (“stable” AIO).

- Prioritize “where-to-win”: queries where you already have organic traction or thematic authority and can improve the citable block.

If you have a high volume, a system that detects AIO by keyword and saves captures/variants saves you time (and reduces sampling biases).
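The detection step can be sketched in a few lines. This is a minimal sketch, assuming you log each manual SERP check as a (keyword, date, AI Overview seen) row; the sample rows, the function names, and the 0.8 stability threshold are illustrative assumptions, not values published by Google.

```python
from collections import defaultdict

# Hypothetical log of manual SERP checks: (keyword, ISO date, AI Overview seen?)
observations = [
    ("how to measure crawl budget", "2025-01-06", True),
    ("how to measure crawl budget", "2025-01-13", True),
    ("how to measure crawl budget", "2025-01-20", True),
    ("seo tool pricing", "2025-01-06", False),
    ("seo tool pricing", "2025-01-13", True),
    ("seo tool pricing", "2025-01-20", False),
]


def aio_stability(rows):
    """Return {keyword: share of checks in which an AI Overview appeared}."""
    seen = defaultdict(list)
    for keyword, _date, has_aio in rows:
        seen[keyword].append(has_aio)
    return {kw: sum(flags) / len(flags) for kw, flags in seen.items()}


def stable_keywords(rows, threshold=0.8):
    """Keywords whose AIO share meets the assumed stability threshold."""
    shares = aio_stability(rows)
    return sorted(kw for kw, share in shares.items() if share >= threshold)


print(stable_keywords(observations))
```

Repeating the check on several dates matters more than the exact threshold: a keyword seen with an AIO once out of three checks is a weak candidate for optimization work.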

Being cited vs. ranking: how they are related (and why the strategy changes)

Many citations come from pages that are already strong organically, because ranking well usually correlates with joining the pool of candidates. But it's not the same metric: here you optimize for presence + attribution, not just for “position X”.

The strategy changes on three counts:

- Objective: “citation + qualified click”, not “guaranteed CTR”. Google indicates that traffic from these experiences is counted in Search Console under “Web”, but it doesn't promise a dedicated AIO report.

- Content: you prioritize extractable blocks (definition, list, steps, table) within a complete and useful page.

- Measurement: you work with hypotheses and tests (controlled changes), because the SERP and the sources cited may vary.

Minimum requirements: indexing, crawling, and snippet eligibility

Before you “do GEO”, make sure that Google can:

- Crawl the URL (robots.txt, WAF/CDN, blocks).

- Index (no accidental noindex, consistent canonicals, correct status).

- Show snippet (with no restrictions that prevent using text in results).

Google summarizes eligibility for AI features as “being indexed and eligible to be displayed with a snippet”, with no additional technical requirements.

Typical mistakes that leave you out even if the content is good:

- noindex by template or by a CMS plugin.

- Canonical pointing to another URL for parameterization/migration.

- Blocks due to robots.txt, firewall or CDN rules.

- Duplication (http/https, with/without www, final slash, parameters) with mixed signals.

- Main content “hidden” behind critical JS (Google can render a lot, but you shouldn't rely on it for the essentials).
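As a rough first pass over the mistakes listed above, the sketch below reads a page's HTML and flags a robots meta noindex, a nosnippet directive, and a canonical that points to a different URL. The class name, the function name, and the example.com URLs are hypothetical. It deliberately ignores robots.txt, X-Robots-Tag headers, and JavaScript rendering, which you would still need to check separately.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Collect robots meta directives and the canonical URL from an HTML document."""

    def __init__(self):
        super().__init__()
        self.directives = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() in ("robots", "googlebot"):
            for part in (a.get("content") or "").split(","):
                self.directives.add(part.strip().lower())
        elif tag == "link" and "canonical" in (a.get("rel") or "").lower().split():
            self.canonical = a.get("href")


def eligibility_issues(html, url):
    """Return a list of signals that can keep a page out of the candidate pool."""
    parser = HeadSignals()
    parser.feed(html)
    parser.close()
    issues = []
    # "none" is shorthand for "noindex, nofollow"
    if "noindex" in parser.directives or "none" in parser.directives:
        issues.append("noindex")
    if "nosnippet" in parser.directives:
        issues.append("nosnippet")
    if parser.canonical and parser.canonical.rstrip("/") != url.rstrip("/"):
        issues.append("canonical points elsewhere")
    return issues


page = (
    '<html><head><meta name="robots" content="noindex, follow">'
    '<link rel="canonical" href="https://example.com/guide"></head>'
    "<body><p>Guide</p></body></html>"
)
print(eligibility_issues(page, "https://example.com/guide/"))
```

An empty list only means that none of these three signals was found in the HTML; it does not confirm that the page is indexed.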

Quick Audit (15 minutes): What to review before touching the content

- 200 response and an indexable final version (without long redirect chains).

- Actual indexing: URL inspection, coverage, and whether the chosen canonical matches your intention.

- Canonical: consistent with sitemap, internal linking and preferred version.

- Sitemap: includes the correct URLs, with no 404s, redirects, or parameterized URLs.

- Internal linking: the page receives contextual links from relevant pages (not just from the footer).

- Reasonable CWV: you don't need “perfect”, but avoid broken or slow experiences that affect the user.

- Duplicates: accessible and linked variants (with/without parameters) without consolidation.

- Accessible content without relying on critical JS to display the main text.

Snippet control (nosnippet, max-snippet, data-nosnippet): when it is appropriate and when it is not

These directives aren't “hacks”; they are editorial controls about how your text is displayed or reused in results.

- nosnippet: prevents displaying snippets (and, in practice, dramatically reduces the ability to use your content as a visible fragment).

- max-snippet: limits snippet length. It may reduce the likelihood of appearing in formats that need more text, but Google warns that it does not guarantee that certain formats disappear; for a guaranteed block, use nosnippet.

- data-nosnippet: Excludes only marked parts of the snippet. If it coexists with nosnippet, nosnippet prevails.
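The precedence between these directives can be illustrated with a small helper. This is a sketch, assuming the input is the content value of a robots meta tag; the function name and return strings are made up for illustration. It treats max-snippet:0 as equivalent to nosnippet and max-snippet:-1 as no limit, following Google's documented meaning of those values, and it does not handle data-nosnippet, which is an HTML attribute rather than a meta directive.

```python
def snippet_policy(robots_content):
    """Summarize snippet permissions from a robots meta content string."""
    directives = [d.strip().lower() for d in robots_content.split(",") if d.strip()]
    if "nosnippet" in directives:
        # nosnippet prevails over any other snippet directive
        return "blocked"
    for directive in directives:
        if directive.startswith("max-snippet:"):
            try:
                value = int(directive.split(":", 1)[1])
            except ValueError:
                continue  # ignore malformed values
            if value == 0:
                return "blocked"
            if value > 0:
                return f"limited to {value} characters"
    return "unrestricted"


for content in ("index, follow", "max-snippet:120", "max-snippet:50, nosnippet"):
    print(content, "->", snippet_policy(content))
```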

When it may be appropriate:

- Protect sensitive fragments (variable prices, clauses, information that is misinterpreted out of context).

- Prevent a specific section from being cited if it tends to generate errors.

When it is usually a bad idea for GEO:

- If your goal is to be cited, blocking snippets reduces the “material” that Google can use and attribute.

Design “summarizable” and citable content (without sounding like AI)

This is the core of GEO: making it easier for a summarization system to find complete and verifiable answers without having to infer too much. It's not about writing “for robots”, but about writing like a teacher: first the key idea, then the context and the nuances.

Patterns that tend to increase citability:

- Definitions in 1-2 sentences.

- Numbered steps when there is a real process.

- Mini-summaries at the beginning of each section.

- Neutral language (no self-promotion, no superlatives, no promises).

- Concrete examples (what to change, where, how to measure).

Open each section with a direct answer (2-3 sentences) and then expand

Example 1 (framework + condition):

“To be cited in AI Overviews, you need to be eligible for Search (indexed and with a snippet) and, in addition, provide clear and verifiable response blocks. If one of those two pillars fails, your chance of citation drops even if the content is long.”

Example 2 (what to do + why + when):

“Start each H2 with 2-3 sentences that answer the sub-question and add the 'why'. It works best for comparative or 'how' queries because AI usually synthesizes decisions and steps.”

Example 3 (limits):

“The schema can help with semantic coherence, but it doesn't guarantee being cited. Use it to describe what is already visible and true on the page, not as a visibility lever.”

Headers that match real sub-questions (query fan-out) without redundancies

If AI Overviews can trigger related searches (“fan-out”), you should anticipate real sub-questions, without repeating what is obvious to an SEO audience. Instead of chaining generic “What is...?” headings, use headers that target micro-intents:

- “How to detect if a keyword triggers AI Overviews”

- “What requirements leave you out even if you rank”

- “Checklist of citable blocks”

- “Errors when using schema in SEO content”

- “How to measure impact without false attribution”

The rule of thumb: every H3 should answer something that someone would type verbatim into Google or ask as a question at work.

Trust signals (E-E-A-T) that increase the likelihood of being cited

In AI Overviews, “being useful” isn't enough: you're competing to be a reliable source. Google points out that it integrates its quality systems and that it raises the bar for queries where the quality of information is critical.

Quick wins for niche-site owners and a process approach for managers can coexist:

- Quick wins: real byline, bio, references, updates.

- Process: editorial policies, periodic review, change log, ownership by topic.

Authorship and Editorial Responsibility: Byline, Bio and Policy Page

Specific elements that usually increase credibility:

- Byline with real name (or editorial team) and role.

- Short bio: relevant experience (years, verticals, type of projects), and what part of the content it covers.

- Editorial policies (a linkable page): how content is reviewed, corrected, and updated.

- Corrections policy: what to do if there are errors and how changes are recorded.

This doesn't “force” Google to cite you, but it reduces trust friction and facilitates human and algorithmic evaluation.

Evidence and references: how to cite sources without turning the article into a paper

Good references in SEO are not an endless list: they are 3-6 sources that support the key points, for example:

- Official documentation (Search Central) about AI features, snippets, policies and practices.

- Studies with clear methodology (sample, period, how they measured), avoiding vague “they say” attributions.

Example of a supportable claim: Google states that there is no special optimization for AI Overviews other than SEO and usual technical requirements.

Signals of first-hand experience: examples, screenshots, tests and “what we saw when measuring”

To get out of generic content, provide operational evidence:

- SERP captures (with date) showing AIO and the sources cited.

- “Before/After” with a single change (e.g., add direct answers + checklist).

- Metrics: impressions/clicks per query and page, and annotation of when the change was made.

Suggested mini-experiment (easy and honest):

- Select 10 URLs with similar intent.

- Apply the “direct answer + extractable list” pattern only to 5 (test group).

- Wait 2-4 weeks (or the typical crawl cycle for your site).

- Compare changes by query and URL, and check whether the citations or AIO sources in the SERP change.
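One way to keep the mini-experiment honest is to assign URLs to the test group at random instead of hand-picking them. The sketch below assumes ten placeholder example.com URLs and a fixed seed so the split is reproducible; the function name is hypothetical.

```python
import random


def split_test_control(urls, test_size, seed=42):
    """Randomly assign URLs to a test group and a control group."""
    rng = random.Random(seed)  # fixed seed: the same split every time you rerun it
    shuffled = list(urls)
    rng.shuffle(shuffled)
    return sorted(shuffled[:test_size]), sorted(shuffled[test_size:])


# Placeholder URLs with similar intent
urls = [f"https://example.com/guide-{i}" for i in range(1, 11)]
test_group, control_group = split_test_control(urls, test_size=5)

print("Apply the pattern to:", test_group)
print("Leave untouched:", control_group)
```

Random assignment reduces the temptation to put your strongest pages in the test group, which would make any improvement look larger than it is.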

Structured data and entities: helps Google understand your content (without promises)

Schema can help with understanding and consistency, but it's not a mechanism to “force” AI Overviews. Google points out that you don't need special schema to appear in these features and that the usual SEO practices continue to apply.

In addition, Google itself notes that structured data enables eligibility but does not guarantee that a feature appears, and that the markup must represent the main, visible content.

What types of schemas usually fit into SEO content (and when to use them)

Simple decision map (only if it matches the actual content):

- Article/BlogPosting: for editorial articles (most common).

- FAQPage: only if there is a real FAQ block on the page and the questions/answers are visible.

- HowTo: only if it's an authentic step by step (not “loose tips”).

- Organization: to reinforce the entity of the site (consistent data).

- Person (author): if the authorship is real and visible.

- SoftwareApplication: if you describe a tool/software in an informative way (features, requirements, use cases), maintaining consistency with the visible content.
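For the most common case in the list above (an editorial article with a visible author), the markup can be generated from the same data that renders the page, which helps keep the two consistent. This is a minimal sketch using standard schema.org Article properties; the function name and all values are placeholders, and the output would go inside a script tag of type application/ld+json.

```python
import json


def article_jsonld(headline, author_name, published, modified):
    """Build Article JSON-LD from the same values shown on the page."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": published,
        "dateModified": modified,
    }
    return json.dumps(data, indent=2)


# Placeholder values: use exactly what the visitor sees on the page
markup = article_jsonld(
    headline="How to rank and be cited in Google AI Overviews",
    author_name="Jane Doe",
    published="2025-01-10",
    modified="2025-03-02",
)
print(markup)
```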

Common schema errors that harm you (spam, inconsistent markup, invisible content)

Typical anti-patterns (and how to audit them):

- Marking up content that is invisible or that differs from the text the user sees (risk of disqualification).

- Marking up irrelevant or misleading things (fake reviews, claims not present on the page).

- Inconsistencies between duplicate pages (different schema for the same canonical page).

- Lack of required properties (not eligible for rich results).

- Using schema as a “decoration” without providing real understanding.

Quick Audit:

- Rich Results Test + validation that the markup reflects exactly what is visible.

- Manual sampling: Open rendered HTML and compare with JSON-LD.

- Duplicate review: same entities, same base data.
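The manual sampling step can be partly automated. The sketch below, under simplifying assumptions, extracts JSON-LD from an HTML string and reports fields whose values do not appear in the readable text. The class and function names are hypothetical, it only inspects top-level string fields, and it cannot detect content hidden with CSS, so treat it as a first filter before the Rich Results Test, not as a replacement for it.

```python
import json
from html.parser import HTMLParser


class PageParts(HTMLParser):
    """Split a page into JSON-LD payloads and the text a visitor can read."""

    def __init__(self):
        super().__init__()
        self.mode = "visible"  # "visible", "jsonld", or "skip"
        self.buffer = []
        self.jsonld = []
        self.visible = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            is_jsonld = dict(attrs).get("type") == "application/ld+json"
            self.mode = "jsonld" if is_jsonld else "skip"
            self.buffer = []
        elif tag == "style":
            self.mode = "skip"

    def handle_endtag(self, tag):
        if tag == "script" and self.mode == "jsonld":
            self.jsonld.append("".join(self.buffer))
        if tag in ("script", "style"):
            self.mode = "visible"

    def handle_data(self, data):
        if self.mode == "jsonld":
            self.buffer.append(data)
        elif self.mode == "visible":
            self.visible.append(data)


def fields_missing_from_page(html, fields=("headline",)):
    """Return (field, value) pairs present in JSON-LD but absent from visible text."""
    parts = PageParts()
    parts.feed(html)
    parts.close()
    visible = " ".join(" ".join(parts.visible).split()).lower()
    missing = []
    for raw in parts.jsonld:
        data = json.loads(raw)
        if not isinstance(data, dict):
            continue  # arrays and @graph structures are out of scope for this sketch
        for field in fields:
            value = data.get(field)
            if isinstance(value, str) and value.lower() not in visible:
                missing.append((field, value))
    return missing
```

For pages that depend on JavaScript, pass the rendered HTML rather than the raw server response, as the audit step above suggests.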

SERP-first strategy: enter the “pool” of candidates before thinking about AIO

AI Overviews doesn't replace SEO: it extends it. Google insists that the best SEO practices are still relevant and that to appear as a supporting link you need to meet technical requirements and be eligible for Search.

Realistic ROI plan:

- gain positions where you are already close,

- convert those URLs into “citable blocks”,

- measure and iterate.

Prioritize opportunities: where you already rank and where there is stable AIO

Prioritization method (fast and actionable):

- Filter keywords in positions 4-20 (close to the first competitive page).

- Keep those with informational or comparative intent.

- Confirm that an AIO is present with some stability (not just on one day).

- Check whether the same sources are repeated: it usually indicates a pattern of “citable” content.
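The first three filters above translate directly into code. The sketch below assumes each keyword row already carries an average position, an intent label, and a share of SERP checks in which an AIO appeared; the field names, sample rows, and the 0.8 stability threshold are illustrative assumptions.

```python
# Hypothetical keyword rows; aio_share = share of SERP checks that showed an AIO
keywords = [
    {"keyword": "geo vs seo", "position": 7.4, "intent": "comparative", "aio_share": 0.9},
    {"keyword": "how to audit canonicals", "position": 12.0, "intent": "informational", "aio_share": 1.0},
    {"keyword": "buy seo tool", "position": 5.0, "intent": "transactional", "aio_share": 0.9},
    {"keyword": "what is a snippet", "position": 2.1, "intent": "informational", "aio_share": 1.0},
    {"keyword": "schema for articles", "position": 15.3, "intent": "informational", "aio_share": 0.3},
]


def prioritize(rows, min_pos=4, max_pos=20,
               intents=("informational", "comparative"), min_aio_share=0.8):
    """Keep near-ranking keywords with the right intent and a stable AIO."""
    picked = [
        row for row in rows
        if min_pos <= row["position"] <= max_pos
        and row["intent"] in intents
        and row["aio_share"] >= min_aio_share
    ]
    return sorted(picked, key=lambda row: row["position"])


for row in prioritize(keywords):
    print(row["keyword"], row["position"])
```

The fourth step, checking whether the same sources repeat, still needs the cited domains from your SERP captures.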

Reverse-engineering of cited sources: what they cover, how they structure it and what is missing

Replicable process:

- Extract the cited sources and capture their structure (H2/H3, tables, lists).

- List subtopics and definitions that repeat.

- Detect gaps: what nobody explains well (measurement, limits, errors, checklist).

- Provide something new: measurable example, useful table, operational checklist, clearer definition or recent update.

The key is not to copy, but to improve usefulness, clarity, and verifiability.

Measurement: how to know if you're winning citations and whether it pays off

The data is not always perfect. Google indicates that traffic from AI features is included in Search Console within “Web” and suggests that you also rely on behavioral analytics and conversions; even so, you should not attribute causation without testing.

In practice, measure with a combination of:

- Search Console (Queries/URLs),

- SERP tracking (presence of AIO + cited sources),

- onsite analytics (click quality).

What to look at in Search Console when there are AI Overviews in your vertical

Recommended approach:

- Analyze by page (target URLs) and by query (clusters).

- Segment by intent (comparative vs. informational vs. “how-to”).

- Note dates of changes (content, internal link, schema, update).

- See trends, not individual days: impressions, clicks, average position and changes by cluster.

Avoid quick “AIO took clicks away” conclusions without comparing:

- equivalent periods,

- simultaneous SERP changes,

- and a control group if possible.
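Comparing equivalent periods by cluster can be done on a Search Console export with a few lines of code. This is a sketch, assuming you have already tagged each exported row with a cluster label; the field names and figures are invented for illustration.

```python
from collections import defaultdict


def ctr_by_cluster(rows):
    """Aggregate clicks and impressions per cluster and return the CTR."""
    totals = defaultdict(lambda: [0, 0])
    for row in rows:
        totals[row["cluster"]][0] += row["clicks"]
        totals[row["cluster"]][1] += row["impressions"]
    return {c: (clicks / imps if imps else 0.0) for c, (clicks, imps) in totals.items()}


def ctr_change(before, after):
    """CTR difference (after minus before) for clusters present in both periods."""
    b, a = ctr_by_cluster(before), ctr_by_cluster(after)
    return {cluster: round(a[cluster] - b[cluster], 4) for cluster in b.keys() & a.keys()}


# Invented figures for two periods of equal length
before = [
    {"cluster": "how-to", "clicks": 30, "impressions": 600},
    {"cluster": "how-to", "clicks": 20, "impressions": 400},
    {"cluster": "comparative", "clicks": 10, "impressions": 500},
]
after = [
    {"cluster": "how-to", "clicks": 40, "impressions": 1000},
    {"cluster": "comparative", "clicks": 15, "impressions": 500},
]
print(ctr_change(before, after))
```

A drop in one cluster is a prompt to check the SERP captures for that period, not proof that an AIO caused it.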

SERP tracking and alerts: detect when you are cited and when sources change

What to capture (minimum feasible):

- SERP by keyword (with date).

- If there is an AIO, which sources it cites and in what order.

- Changes to cited sources (entries/exits) after your optimizations.

How to turn it into a process:

- Weekly monitoring for your prioritized set.

- Alerts when cited sources change or AIO appears or disappears.

- Record of hypotheses and associated changes.

Support from a tool such as Makeit Tool fits here: AIO detection by keyword, citation tracking, and comparison with cited competitors, so you don't have to rely on manual reviews.
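If you track by hand, the alert logic is a set comparison between two captures of the same keyword. The sketch below assumes each capture is a list of cited domains; the domains and the function name are placeholders and are not tied to any specific tool.

```python
def citation_changes(previous, current):
    """Compare the cited sources of two SERP captures for the same keyword."""
    prev, curr = set(previous), set(current)
    return {
        "entered": sorted(curr - prev),
        "dropped": sorted(prev - curr),
        "kept": sorted(prev & curr),
    }


# Placeholder captures taken one week apart
last_week = ["example.com", "docs.example.org", "blog.example.net"]
this_week = ["example.com", "yoursite.example", "docs.example.org"]

changes = citation_changes(last_week, this_week)
if changes["entered"] or changes["dropped"]:
    print("Cited sources changed:", changes)
```

Because sets discard order, this version only catches entries and exits; to track position within the AIO, compare the lists index by index as well.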

Controlled Experiments: How to Test Changes Without Deceiving Yourself

Simple framework:

- Hypothesis: “If I open each section with 2-3 direct sentences and add a checklist, I'll increase citability in X queries.”

- Single change: Edit only one element per iteration.

- Period: Define a window (e.g., 2-6 weeks depending on crawling and competition).

- Outcome: check SERP (citations), GSC (queries/URLs) and onsite (quality).

- Decision: Scale, adjust, or reverse.

Practical workflow with Makeit Tool (SEMrush-style) to accelerate GEO

This flow can be executed with any stack. What a tool adds is less friction: fewer manual steps, more consistent tracking.

Discover opportunities: keywords with AIO + intent + real difficulty

Replicable workflow:

- List target keywords and mark which ones have AIO.

- Cross-reference with intent (informational/comparative) and with near-ranking positions.

- Evaluate the cited competition: if the same sources always appear, study the pattern and look for the gap (format, evidence, freshness, clarity).

With a tool: you filter directly by “keyword with AIO”, estimate opportunities and export a prioritized backlog.

Audit of “citable blocks”: structure, clarity, E-E-A-T and schema in a checklist

Operational checklist by URL:

- Structure: H2/H3 answer real sub-questions.

- Opening: 2-3 direct sentences per section.

- Format: At least one useful table/list/checklist.

- E-E-A-T: author + bio + revision date + linkable editorial policy.

- Evidence: 3-6 key references (prioritize official documents).

- Schema: matches visible text, without overmarking.

- Snippets: There are no unintended restrictions.

With a tool: you validate systematically (and reduce omissions), but without promising that “passing the checklist” will get you cited.

Monitor cited competitors: what they publish, how they update and what signals they repeat

Continuous process:

- Identify domains that are cited repeatedly in your cluster.

- Record their pattern: formats, useful length (not total), types of evidence, update frequency, entities.

- Convert patterns into backlogs: “add comparison table”, “improve definitions”, “update with dates”, “include mini-experiment”.

With automated monitoring, you detect changes in cited sources and react sooner.

Mistakes that lower your chances of showing up in AI Overviews

The anti-patterns that most harm citability tend to coincide with what Google treats as low quality or manipulation: undifferentiated content, unsupported claims and scaling without added value.

Google maintains that using automation (including AI) to generate content for the main purpose of manipulating rankings violates its spam policies, and warns of the risk of “scaled content abuse” when many pages are created without adding value.

Scaling content without adding value (and how to avoid it)

Typical signs:

- “Thin” pages that repeat the same thing with minimal variations.

- Generic summaries without own experience or examples.

- Semantic duplication between URLs in the same cluster.

How to mitigate it:

- Consolidate: fewer URLs, more complete and useful.

- Update: add evidence, measurable examples, tables/checklists.

- Differentiate: incorporate an operational point of view (“how do we measure it”, “what went wrong”, “what trade-offs are there”).

Promises, promotional tone and forced “optimization”

Phrases to avoid (and neutral rewriting):

- “We guarantee you'll be listed in AI Overviews” → “You can increase the likelihood with technical requirements, citable content and measurement, without guarantees.”

- “The Best Method” → “A practical and reproducible method that usually works in comparative queries.”

- “You'll double your traffic” → “You can change the mix of impressions and clicks; measure by cluster and decide based on traffic quality.”

A promotional tone reduces trust and, in short, tends to be less “citable” because it is not verifiable.

Obsolete content: maintenance plan to remain citable

On topics that change quickly (SEO, SERPs, features), maintenance is part of positioning:

- Review quarterly or every six months, depending on the volatility of the vertical.

- Change log: what was updated and when.

- Audit of links and sources: replace expired references.

- Revalidation of snippets and schema after CMS/template changes.
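A review schedule like this is easy to enforce with a dated inventory. The sketch below assumes you keep a mapping of URL to last review date and treats 90 days as the quarterly cutoff; the URLs, dates, and function name are placeholders.

```python
from datetime import date, timedelta


def due_for_review(pages, today, max_age_days=90):
    """Return URLs whose last review is at least max_age_days old."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(url for url, last_reviewed in pages.items() if last_reviewed <= cutoff)


# Placeholder inventory: URL -> date of last editorial review
pages = {
    "https://example.com/ai-overviews-guide": date(2025, 1, 15),
    "https://example.com/snippet-controls": date(2025, 6, 1),
}
print(due_for_review(pages, today=date(2025, 6, 30)))
```

For a six-month cycle, pass max_age_days=180 for the less volatile clusters.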

FAQs on how to rank in Google's AI Overviews

Can you “optimize” directly for AI Overviews, or is it just SEO as usual?

There is no single lever. Google indicates that the best SEO practices continue to apply and that there are no special requirements or optimizations to appear in AI Overviews; the essential thing is to be indexed, to be eligible for snippets and to provide useful, clear and reliable content. The difference is in structuring it to be easily citable.

Does schema guarantee being cited in an AI Overview?

No. Structured markup can help Google understand and represent your content, but it doesn't ensure that you appear in a specific feature. Google points out that you don't need special schema for AI features and that, in general, structured data enables eligibility but doesn't guarantee appearance. Use it only if it matches the visible text.

What weighs more: being in the top 10 or having a Q&A/Checklist format?

You need both in balance. Being well positioned increases the likelihood of entering the pool of candidates, but the format influences whether you are “extractable” and citable. Prioritize keywords where you're already close (for example, positions 4-20) and improve clarity: direct answers, useful lists, and evidence, without promising CTR effects.

How do nosnippet and max-snippet affect AI Overviews?

These are editorial controls with trade-offs. nosnippet blocks snippets and reduces reusable content in results. max-snippet limits length, but Google warns that it does not guarantee that certain formats disappear; for complete blocking, use nosnippet. data-nosnippet excludes specific fragments, and if it coexists with nosnippet, nosnippet prevails.

How do I measure if an AI Overview is taking away clicks (or bringing better ones)?

Measure by clusters and comparable periods. Use Search Console for queries and pages, annotate dates of changes and cross-check with SERP tracking (whether there is an AIO and which sources it cites). Complement this with onsite analytics to evaluate quality (time, conversions). Avoid attributing causality to AIO without a test or without controlling for simultaneous changes.

How long does it take to notice the effect of optimizing for AIO citations?

It depends on crawling, reindexing, competition, and SERP stability. On sites with a good crawl budget and clear changes, it can be noticed within weeks; on large sites or in very competitive clusters, it can take longer and be intermittent. Work with hypotheses, single changes and defined windows, because AI Overviews aren't always active.

What do I do if an AI Overview cites my brand with incorrect or outdated information?

First, correct and clarify your source: update the content, add dates, define terms and eliminate ambiguities. Reinforce entity signals (author, references, editorial policies) and monitor the SERP to see if citations or summarized text change. There is no total control over the summary, but you can improve the quality and timeliness of the page that Google takes as support.

(Extra) Does Google “penalize” content made with AI if it's high quality?

Google doesn't prohibit the use of AI by default, but it warns that using automation to generate content for the primary purpose of manipulating rankings violates spam policies, and that scaling without added value can be considered abuse. The practical key is human oversight, originality, evidence and real utility, not volume.

(Extra) Is there a way to “force” Google not to use parts of my text in summaries?

You can limit snippets with directives such as nosnippet, max-snippet and data-nosnippet, but the cost is usually a loss of visibility and citation possibilities. nosnippet is the most restrictive option; data-nosnippet allows you to exclude specific sections. Think of them as editorial decisions, not as positioning tactics.
