The impact of AI on SEO: what changes and how to adapt

AI doesn't “kill” SEO, but it does change how attention is distributed on Google, when the click happens, and what quality standard separates useful content from generic content. In 2026, the key question is no longer just “what position am I in?”, but “is my content chosen as a reference, cited, and converted when the user really needs to go deeper?”

AI isn't ending SEO: it's changing the rules of the game. In 2026, SERPs with generative answers redistribute clicks, raise the quality bar and force us to move from “ranking keywords” to building measurable utility and trust: content with real judgment, a citable structure, signals of authority, and measurement by intent, cluster and business outcome. Whoever understands this change and translates it into processes (updating, verification, governance and data-based prioritization) not only adapts but also competes better in an environment where being chosen and cited weighs as much as position.

This forces you to adjust three practical decisions:

- What to produce and what to update: fewer one-off articles and more pieces with judgment, examples and maintenance.

- How to measure impact: less obsession with isolated keywords and more analysis by intent, cluster and page.

- How to build trust: demonstrable utility, authorship, precision and clear limits (especially in YMYL verticals).

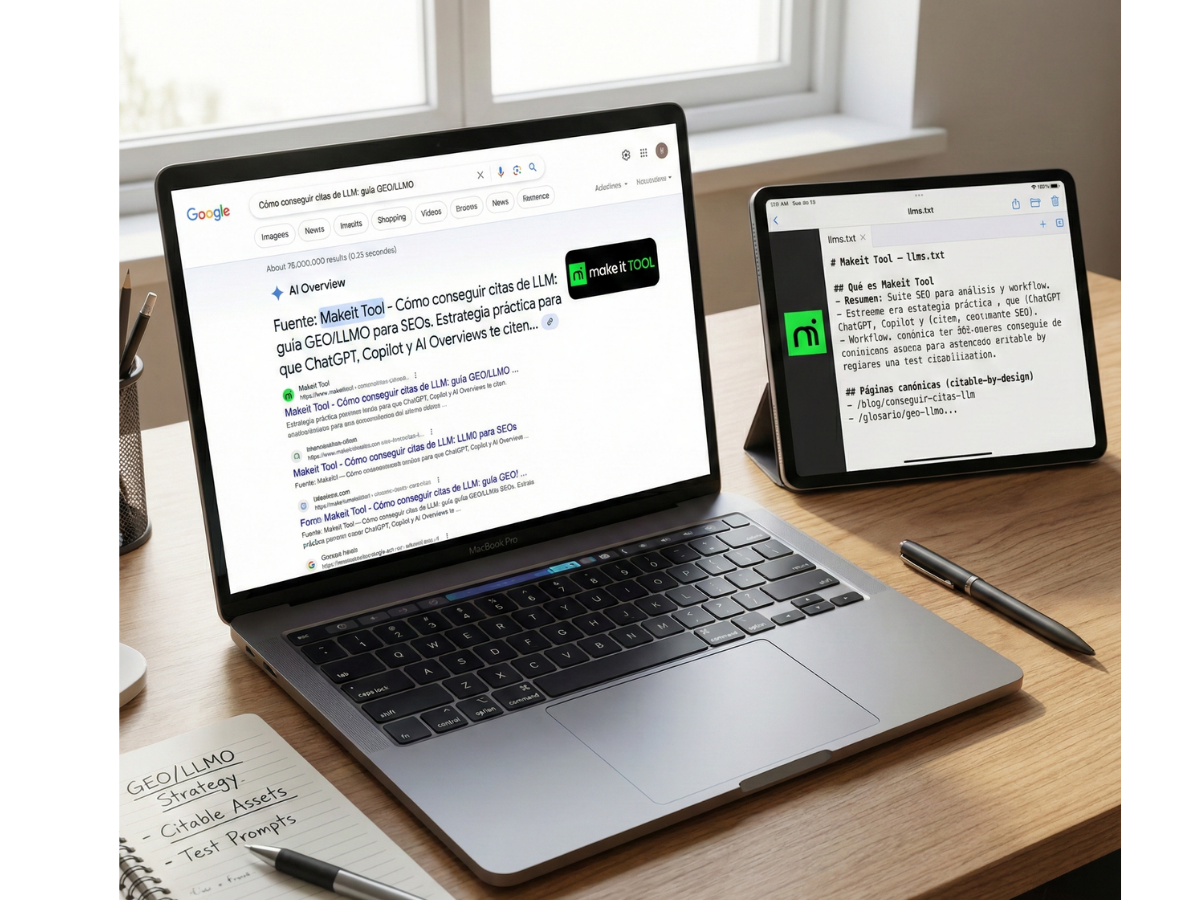

In this context, a SEMRush-type suite such as Makeit Tool can help you research demand, monitor SERP changes, prioritize your backlog and track competitors with an operational approach (without turning the strategy into “publishing for the sake of publishing”).

The new SERP: generative responses, support links and “query fan-out”

Experiences such as AI Overviews and AI Mode tend to handle part of the “informational work” within the SERP: the user gets a summary, quick comparisons or main steps without visiting a website. The typical effect is fewer clicks on simple informational searches, but the clicks that remain can be more qualified: the users who arrive have already filtered and need details, validation, examples or help deciding.

What matters for strategy: these AI layers can reshape demand. The same search can open a “path” of sub-questions and refinements, and your content competes not only to “rank”, but to serve as supporting material (cited) and to capture the click when the user wants to go deeper.

Expectations also need to be realistic: Google has insisted that there are no “special” optimizations for appearing in these experiences beyond doing the basics right: useful, crawlable, indexable, clear content with signals of trust.

What it means when Google does “query fan-out” (and why keyword research changes)

“Query fan-out” can be understood as the process by which the engine expands a query into a set of related subqueries: definitions, nuances, comparisons, use cases, pros and cons, requirements, risks, alternatives and steps. If your content is designed only for one exact keyword (“narrow” content), it is more likely to fall short against a SERP that already offers a multi-angle summary.

Practical actions to adapt keyword research and content:

- Cover real subtopics, not empty variants: instead of creating 10 URLs for synonyms, create one solid piece that answers meaningful sub-questions.

- Answer in layers: first a short, direct answer; then context, criteria and examples.

- Write self-contained sections: each block should be readable on its own (useful for AI, snippets and users who scan).

- Reinforce semantic consistency: use clear entities (concepts, tools, metrics), stable definitions and comparisons that use consistent terms.

- Include trade-offs: what works, when it doesn't, limits and cases where another solution is more appropriate.

Expected result: less dependence on “one keyword = one URL” and more focus on the cluster (main topic + the sub-topics the user really needs in order to decide).

Visibility is no longer just “position”: being cited and being chosen now matter too

In 2026, “visibility” splits into three levels that should be measured separately:

- Ranking: being in the traditional set of results.

- Being a candidate for citation: having content that AI can extract with confidence (clear blocks, verifiable data, definitions, structure).

- Getting a qualified click: capturing the user when they need depth, verification or help making a decision.

There's no universal impact figure (it depends on vertical, intent, brand and SERP), so the sensible approach is to work with hypotheses and tests: identify which types of query are “absorbing” answers in the SERP, and where users keep clicking out of necessity.

Impact on traffic and business: fewer clicks, different clicks and new attributions

The question “is my traffic going to fall?” is legitimate, but the useful answer is: it depends on the type of traffic and on the role that page plays in your funnel.

- In informational TOFU (definitions, basic concepts), part of the value is more likely to stay in the SERP.

- In MOFU/BOFU (comparisons, implementation, selection, pricing, risk, requirements), the user usually needs details and validation: here content with real judgment can gain importance.

In addition, AI features are blended into the experience, which is why it makes sense to measure by clusters and pages, not just by single keywords. According to Google, performance associated with these experiences is reflected in the overall Search Console data rather than in a separate report, so measurement has to be more analytical, not just report-reading.

What types of content tend to lose the most value (and how to convert them)

Content patterns that tend to depreciate when the SERP summarizes:

- Basic definitions with no original contribution.

- Generic listings (“the 10 advantages of...”) with repeated ideas.

- Wikipedia-like content: correct but interchangeable.

- Superficial how-tos: no examples, no common errors, no context.

How to convert them to regain utility:

- Criteria guides: not just “what it is”, but “when it's appropriate”, “how to choose”, “what to avoid”.

- Comparisons with trade-offs: explain real pros/cons, not marketing.

- Own examples: cases, mini-audits, analysis fragments, reasoned decisions.

- Templates and checklists: artifacts that the user can use (and that are not completely “consumed” in a summary).

- Visible update: incorporate recent changes and explain what has changed and why.

This gives you a prioritization criterion: before you publish more, improve what already captures demand and is most likely to be “summarized” by the SERP.

Where opportunities appear: complex long-tail, comparisons and multi-step searches

In a world of summarized answers, what gains value is content that helps users decide and execute. Examples of typical opportunities:

- Complex long-tail: queries with conditions (“for e-commerce with X”, “if I have limitation Y”, “with budget Z”).

- Comparisons: “A vs B” with criteria, not just characteristics.

- Multistep searches: when the user needs a method (diagnosis, prioritization, roadmap, measurement).

- Implementation content: steps, QA, common errors, examples.

The idea is not to chase “rare keywords”, but to build pieces for a user who is already dealing with the problem and needs a framework to move forward.

The bar is rising: Google rewards useful, reliable and “people-first” content (with or without AI)

AI has made publishing cheaper. When producing text is easy, the difference is not in “having content”, but in having content that deserves trust and use. That's why the “people-first” approach fits: pages created to help the user, not to fill keyword gaps.

A practical framework for evaluating quality is to think about Who/How/Why:

- Who: who wrote it and why they are competent on that topic.

- How: how it was created (with what process, verification, sources, review).

- Why: why it exists (solving a real need, not just capturing traffic).

You don't need to turn this into a manifesto. All you need is for the content to show consistency: precision, limits, examples and maintenance.

Differential value signals: own experience, data, examples and clear limits

Auditable signals (“proof of work”) that usually increase utility:

- Screenshots and evidence: SERPs, GSC, observed patterns (with date).

- Mini-experiments: what you changed, what you measured, what you learned (without promising replicability).

- Results with context: not “we went up 200%”, but “on this type of page, with this change, we saw X”.

- Templates and checklists: to apply the method.

- Before/After: structure, coverage, snippet, intent.

- Explicit limits: what the content doesn't cover and when to seek expert support.

These signals don't “guarantee” anything, but they do build an asset that is difficult to clone.

Update and maintenance: SEO works more like a product than a series of one-off articles

It increasingly resembles a product approach: you have pages that play a role, compete, age and require iteration.

Recommended routine (especially useful for managers):

- Review by cluster (not by isolated URL): which pieces cover which sub-intents.

- Refresh key sections: definitions, criteria, tools, examples.

- Show a “last updated” date only when there are real changes.

- Annotate changes (what was changed and when) to be able to correlate them with data.

- Recirculate internal links: reinforce the paths to pages that convert.
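Annotating changes pays off when each annotation can be compared against the data. As a minimal sketch, assuming you already have daily impressions and clicks per page (for example, exported from Search Console) and keep a simple change log, the hypothetical helper below compares a fixed window before and after each annotated change. The `DailyRow` and `Annotation` types and the 28-day default are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DailyRow:
    day: date
    page: str
    impressions: int
    clicks: int


@dataclass
class Annotation:
    day: date   # date the change went live
    page: str
    note: str   # what was changed


def window_totals(rows, page, start, end):
    """Sum impressions and clicks for `page` over the half-open range [start, end)."""
    selected = [r for r in rows if r.page == page and start <= r.day < end]
    return sum(r.impressions for r in selected), sum(r.clicks for r in selected)


def ctr(impressions, clicks):
    return clicks / impressions if impressions else 0.0


def before_after(rows, annotation, days=28):
    """Compare the `days` before the change with the `days` starting on the change date."""
    before = window_totals(rows, annotation.page, annotation.day - timedelta(days=days), annotation.day)
    after = window_totals(rows, annotation.page, annotation.day, annotation.day + timedelta(days=days))
    return {
        "note": annotation.note,
        "before": before,
        "after": after,
        "ctr_before": ctr(*before),
        "ctr_after": ctr(*after),
    }


# Usage: 14 days before and after a rewrite of a hypothetical /guide page.
rows = [
    DailyRow(date(2026, 3, 1) + timedelta(days=i), "/guide", 100, 5 if i < 14 else 8)
    for i in range(28)
]
change = Annotation(date(2026, 3, 15), "/guide", "Rewrote intro as a citable block")
print(before_after(rows, change, days=14))
```

A before/after difference is a signal to investigate, not proof of causality: seasonality, SERP changes or other deployments in the same window can explain it too.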

AI to produce content: accelerator if there is control, risk if there is worthless scaling

Using AI in content can be a reasonable accelerator for:

- ideation and structure,

- initial drafts,

- reorganization,

- variations of examples,

- QA support (detect gaps, duplicates, inconsistencies).

The main risk is not “using AI”, but scaling volume without value: publishing lots of generic or repetitive pages, or pages with unverified statements. At that point, you fall into patterns consistent with spam or scaled content abuse, and you also erode trust and reputation.

Recommended pipeline (human + AI): briefing → draft → verification → editing → QA SEO

A repeatable flow for a niche site or a team:

- Briefing: intent, audience, what decisions the piece should enable, what not to cover.

- Draft (with AI if appropriate): structure + base answers, without asserting unverified data.

- Verification (required): review claims, dates, definitions, and remove “convincing filler”.

- Human editing: add criteria, own examples, trade-offs, limits.

- SEO QA: intent, internal linking, readability, cannibalization, consistent meta tags, schema if applicable.

Rule of thumb: If a block doesn't provide something that a SERP summary wouldn't, it should be rewritten or deleted.

Minimum editorial policy for teams: what is allowed, what is reviewed and what is never published

A simple operating standard reduces risks:

It is allowed

- AI for structure, drafts, internal summaries and reorganization.

It is always checked

- Data, statistics and “trends”: source and date.

- Causal statements (“this improves X”): nuance and condition.

- Tone: Avoid promises and promotional language.

- Consistency: definitions, entities, criteria repeated throughout the content.

It is never published

- Statistics without a verifiable source.

- Invented cases or false captures.

- Duplicate/paraphrased content without input.

- Irresponsible recommendations on YMYL (health/finance/legal) issues without adequate review.

“GEO/LLMO”: optimize to be understood and quoted, not to sound robotic

If the SERP synthesizes, your content should be extractable (easy to summarize without losing precision) and attributable (clear about who says it and on what basis). It's not about “writing for robots”, but about writing with structure and clarity for humans... which also works well when a system needs to select supporting sources.

Again, no shortcuts: Google doesn't ask for special optimizations; what works is solid SEO fundamentals plus useful, reliable content.

“Citable blocks”: how to write sections that survive the summary

Recommended pattern for each important section:

- Direct answer in 2–3 sentences (definition, criterion or general recommendation).

- Development with nuances, limits and examples.

- Numbered steps when there is a process.

- Short glossary if there is jargon.

This increases the likelihood that a block will be chosen as a support because it is compact, precise and does not depend on the rest of the text to be understood.

Formats that best transfer value: decision tables, checklists and examples

One way to turn this post into a practical reference is to include reusable tools. For example:

“Savable” checklist for citable on-page blocks:

- Definition or direct answer at the beginning of the block.

- Criteria and trade-offs (when yes/when not).

- Applicable example (even if it's simple).

- Update and date when the topic evolves.

- Internal link to implementation/decision.

Technical SEO in the AI era: indexing, rendering and snippet control

You can have the best content in the world and still be left out because of technical failures. In the AI era, the technical minimum remains the same, but the cost of error is higher: if you're not eligible for indexing or snippets, you're competing neither for traditional results nor for being cited as support.

In addition, there are preview controls (nosnippet, max-snippet, data-nosnippet, noindex) that are real editorial levers, but they come with trade-offs: limiting extraction can protect content, but it can also reduce visibility.

Eligibility: indexed + snippet (without that, there's no game)

Quick eligibility checklist:

- No accidental noindex in templates or sections.

- Consistent canonicals (avoid self-cannibalization through parameters/duplicates).

- No robots.txt blocking of critical resources.

- Main content accessible in the HTML (or consistent rendering if it depends on JS).

- Sufficient internal linking for discovery and distribution of authority.

- Quality signals: author, date, clear structure, no large-scale duplication.

This doesn't “optimize for AI”; it just avoids being left out.
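Several items on the eligibility checklist above can be spot-checked with Python's standard library. This is a sketch, not a crawler or an official validator: `check_eligibility` and `robots_allows` are hypothetical helper names, the HTML, header value and robots.txt text are assumed to be fetched already, and `urllib.robotparser` may not interpret every rule (for example, path wildcards) exactly as Googlebot does. It also does not render JavaScript, so JS-dependent content needs a separate check.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class EligibilityParser(HTMLParser):
    """Collects robots meta directives and canonical links from a page's HTML."""

    def __init__(self):
        super().__init__()
        self.directives = set()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            for part in a.get("content", "").split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonicals.append(a.get("href", ""))


def check_eligibility(html, page_url, x_robots_tag=""):
    """Return flags to review; an empty list means none of these checks raised anything."""
    parser = EligibilityParser()
    parser.feed(html)
    directives = set(parser.directives)
    # Simplification: treats the X-Robots-Tag header as a plain comma-separated list.
    directives |= {p.strip().lower() for p in x_robots_tag.split(",") if p.strip()}

    issues = []
    if directives & {"noindex", "none"}:  # "none" = noindex, nofollow
        issues.append("noindex/none: page is not eligible for indexing")
    if directives & {"nosnippet", "max-snippet:0"}:
        issues.append("nosnippet/max-snippet:0: no text snippet can be shown")
    if not parser.canonicals:
        issues.append("no canonical link found")
    elif len(parser.canonicals) > 1:
        issues.append(f"multiple canonical links: {parser.canonicals}")
    elif parser.canonicals[0] != page_url:  # exact match only; no URL normalization
        issues.append(f"canonical points elsewhere: {parser.canonicals[0]}")
    return issues


def robots_allows(robots_txt, url, user_agent="Googlebot"):
    """Check a URL against robots.txt rules using the standard-library parser."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp.can_fetch(user_agent, url)


# Usage with inline examples instead of live fetches.
page = (
    '<head><meta name="robots" content="noindex, follow">'
    '<link rel="canonical" href="https://example.com/guide"></head>'
)
print(check_eligibility(page, "https://example.com/guide"))
print(robots_allows("User-agent: *\nDisallow: /private/\n", "https://example.com/private/report"))
```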

When to limit previews (and why it can be expensive)

Limiting previews can make sense in cases such as:

- genuinely premium or paid content,

- policies or documents where you prefer strict control of fragments,

- sensitive content with a risk of interpretation out of context.

The potential cost:

- less extractability (less likely to be support),

- less attractive in SERP,

- drop in CTR or visibility in certain formats.

It's not a “hack”. It's an editorial decision: protect value vs maximize discovery.
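If you do decide to limit previews, page-level directives go in a robots meta tag (or the equivalent `X-Robots-Tag` HTTP header), while `data-nosnippet` marks individual fragments and can be placed on `span`, `div` and `section` elements. The sketch below is a hypothetical Python helper that renders those values so the decision stays explicit and reviewable; the function names are illustrative, and the directive syntax follows Google's documented robots meta tag rules (`max-snippet:-1` means no limit, `max-snippet:0` behaves like `nosnippet`).

```python
import html

VALID_IMAGE_PREVIEWS = {"none", "standard", "large"}


def robots_meta(noindex=False, nosnippet=False, max_snippet=None, max_image_preview=None):
    """Build a robots meta tag from explicit editorial choices ("" if nothing is restricted)."""
    directives = []
    if noindex:
        directives.append("noindex")
    if nosnippet:
        directives.append("nosnippet")
    if max_snippet is not None:
        # -1 = no limit, 0 = no text snippet, N = at most N characters
        if max_snippet < -1:
            raise ValueError("max_snippet must be -1, 0 or a positive number of characters")
        directives.append(f"max-snippet:{max_snippet}")
    if max_image_preview is not None:
        if max_image_preview not in VALID_IMAGE_PREVIEWS:
            raise ValueError(f"max_image_preview must be one of {sorted(VALID_IMAGE_PREVIEWS)}")
        directives.append(f"max-image-preview:{max_image_preview}")
    if not directives:
        return ""
    content = ", ".join(directives)
    return f'<meta name="robots" content="{content}">'


def exclude_from_snippets(text):
    """Wrap a fragment (escaped as plain text) in a span marked with data-nosnippet."""
    return f"<span data-nosnippet>{html.escape(text)}</span>"


# Usage: cap text snippets on a premium page and keep one pricing fragment out of snippets.
print(robots_meta(max_snippet=160, max_image_preview="standard"))
print(exclude_from_snippets("Member price: 49 EUR"))
```

Keeping the choice in one helper makes the trade-off visible in review: each restriction is a deliberate decision rather than a template default.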

Authority and brand: AI focuses attention, so branding weighs more

When the SERP summarizes, many users decide based on quick signals: recognizable source, consistency and reputation. That may concentrate attention on strong brands, but it doesn't mean it's impossible to compete. It means you must build cumulative “evidence”: a clear entity, specialization, and assets that are not interchangeable.

In terms of SEO, it's not just classic link building. It's a mix of:

- mentions and reputation,

- author profiles and editorial responsibility,

- own assets (data, tools, studies),

- thematic coherence (well-defined clusters).

From backlinks to “evidence of authority”: mentions, author profiles and unique assets

Asset ideas that tend to generate stronger signals than “one more article”:

- SERP studies by vertical (with methodology and dates).

- Proprietary datasets (even small ones, if they are useful and up to date).

- Free tools (calculators, checkers, templates).

- Glossaries and decision frameworks that others cite.

- Templates for reporting, auditing or prioritization.

The logic is simple: if the asset is useful and unique, it has a better chance of being cited, linked and remembered.

If your website is YMYL (health/finance): expert review and explicit limits

Although this post is about SEO, many readers work in YMYL sectors. In those cases, the standard of accuracy and accountability rises:

- Expert review where applicable (medical, legal, financial).

- Clear and up-to-date sources, without extrapolating conclusions.

- Prudent language: do not promise results, do not recommend sensitive actions without a qualified professional.

- Explicit limits: What is general information and what requires professional evaluation.

Applying a “fast” approach in YMYL can be costly in trust, reputation, and risk.

Measurement in 2026: What to look at when AI features are mixed into data

If AI features are integrated into the experience, measurement must take that context into account. According to Google, activity related to these experiences is reflected in the overall Search Console data, so impact has to be inferred through segmentation and annotations rather than by waiting for a perfect report.

In practice, a hybrid approach works:

- GSC for demand (impressions, clicks, CTR) per page and query.

- Annotations of changes (content, template, internal linking, deployments).

- SERP feature tracking (observation of the appearance of modules by priority clusters).

- Conversions and lead quality to avoid “SEO-only” conclusions.
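To combine the GSC and conversion views above at cluster level, a minimal sketch is to map each page to a cluster and aggregate. It assumes you already have query+page rows exported from Search Console and conversions per landing page from your analytics tool; the page-to-cluster mapping, field names and numbers are hypothetical and would come from your own content inventory.

```python
from collections import defaultdict


def cluster_report(gsc_rows, page_to_cluster, conversions_by_page):
    """Aggregate impressions, clicks and conversions per cluster, then derive CTR and conversion rate."""
    totals = defaultdict(lambda: {"impressions": 0, "clicks": 0, "conversions": 0})
    for row in gsc_rows:
        cluster = page_to_cluster.get(row["page"], "unassigned")
        totals[cluster]["impressions"] += row["impressions"]
        totals[cluster]["clicks"] += row["clicks"]
    for page, conversions in conversions_by_page.items():
        totals[page_to_cluster.get(page, "unassigned")]["conversions"] += conversions

    report = {}
    for cluster, t in totals.items():
        report[cluster] = {
            **t,
            "ctr": t["clicks"] / t["impressions"] if t["impressions"] else 0.0,
            "conversion_rate": t["conversions"] / t["clicks"] if t["clicks"] else 0.0,
        }
    return report


# Usage with illustrative numbers (not real data).
rows = [
    {"query": "what is crawl budget", "page": "/crawl-budget", "impressions": 1000, "clicks": 20},
    {"query": "crawl budget vs crawl rate", "page": "/crawl-budget", "impressions": 200, "clicks": 10},
    {"query": "seo audit template", "page": "/audit-template", "impressions": 500, "clicks": 40},
]
clusters = {"/crawl-budget": "technical", "/audit-template": "audits"}
for name, metrics in cluster_report(rows, clusters, {"/audit-template": 4}).items():
    print(name, metrics)
```

Pages missing from the mapping land in an "unassigned" bucket, which doubles as a reminder to keep the cluster inventory up to date.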

Analysis framework: per cluster → intent → per page (not just per keyword)

Recommended segmentations:

- Informational vs transactional (and within: definitions vs comparative vs implementation).

- Branded vs non-branded (brand cushions impacts and improves CTR in some contexts).

- How-to vs comparison vs decision (they have different click behaviors).

- Top URLs by cluster (to see cannibalization and dispersion).

This reduces noise: a keyword can go down, but the cluster can be maintained or even improved in business.
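The segmentations above can start as simple rules. The sketch below assumes a hypothetical list of brand terms and a few English keyword patterns per intent; the patterns are illustrative only, and a real setup would need rules tuned to your language and vertical, plus manual review of the "other" bucket.

```python
import re
from collections import Counter

# Illustrative patterns, checked in order; the first matching intent wins.
INTENT_RULES = [
    ("transactional", re.compile(r"\b(price|pricing|buy|cost|discount)s?\b")),
    ("comparison", re.compile(r"\b(vs|versus|compare|comparison|alternatives?|best)\b")),
    ("how-to", re.compile(r"\b(how to|steps?|tutorial|guide|checklist|template)\b")),
    ("definition", re.compile(r"\b(what is|what are|meaning|definition)\b")),
]


def classify_query(query, brand_terms):
    """Return (is_branded, intent) for a search query."""
    q = query.lower()
    branded = any(term.lower() in q for term in brand_terms)
    for intent, pattern in INTENT_RULES:
        if pattern.search(q):
            return branded, intent
    return branded, "other"


def clicks_by_segment(rows, brand_terms):
    """Sum clicks per (is_branded, intent) segment from (query, clicks) pairs."""
    totals = Counter()
    for query, clicks in rows:
        totals[classify_query(query, brand_terms)] += clicks
    return totals


# Usage with a hypothetical brand term.
rows = [("what is query fan-out", 10), ("makeit tool pricing", 5), ("what is ctr", 3), ("makeit vs semrush", 7)]
print(clicks_by_segment(rows, ["makeit"]))
```

Because the first match wins, rule order is itself a design choice: with this ordering, a query like "makeit pricing vs semrush" counts as transactional rather than comparison.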

Typical impact indicators: stable impressions + low CTR + high/low conversion

Common patterns (to be investigated, not “rules”):

- Stable impressions + low CTR: demand exists, but the SERP absorbs part of the click.

What I would do: convert blocks to “citable + useful”, add criteria, examples and assets; check whether the real intent has changed.

- Impressions go down + stable CTR: loss of presence or a change in the query mix.

What I would do: review coverage by subtopic, competition, cannibalization and freshness.

- Clicks go down + conversion goes up: less volume, more qualified traffic.

What I would do: protect and extend MOFU/BOFU content, and improve internal paths to decision pages.

- Same clicks + low conversion: traffic is less aligned, or the content doesn't resolve the need.

What I would do: adjust intent, improve clarity, add criteria and steps, review UX.
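The four patterns above can be turned into a first-pass triage over two comparable periods for a page or cluster. This is a sketch under stated assumptions: the `Period` type and `diagnose` function are hypothetical, the 10% tolerance for “stable” is an arbitrary threshold, and the output is a list of hypotheses to investigate, exactly as the patterns are meant to be read, not a verdict.

```python
from dataclasses import dataclass


@dataclass
class Period:
    impressions: int
    clicks: int
    conversions: int

    @property
    def ctr(self):
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self):
        return self.conversions / self.clicks if self.clicks else 0.0


def change(old, new):
    """Relative change from old to new; 0.0 if both are zero, +inf if growing from zero."""
    if old == 0:
        return 0.0 if new == 0 else float("inf")
    return (new - old) / old


def diagnose(before, after, tolerance=0.10):
    """Map period-over-period changes to the four patterns (hypotheses only)."""
    imp = change(before.impressions, after.impressions)
    ctr = change(before.ctr, after.ctr)
    clicks = change(before.clicks, after.clicks)
    conv = change(before.conversion_rate, after.conversion_rate)

    def stable(x):
        return abs(x) <= tolerance

    hypotheses = []
    if stable(imp) and ctr < -tolerance:
        hypotheses.append("stable impressions + lower CTR: the SERP may be absorbing part of the click")
    if imp < -tolerance and stable(ctr):
        hypotheses.append("lower impressions + stable CTR: check subtopic coverage, competition, cannibalization, freshness")
    if clicks < -tolerance and conv > tolerance:
        hypotheses.append("fewer clicks + higher conversion rate: protect and extend MOFU/BOFU pages")
    if stable(clicks) and conv < -tolerance:
        hypotheses.append("same clicks + lower conversion rate: review intent alignment, clarity and UX")
    return hypotheses


# Usage with illustrative numbers for one cluster.
for h in diagnose(Period(10_000, 300, 6), Period(10_200, 200, 6)):
    print(h)
```

Several patterns can fire at once (in the usage example, both the CTR and the conversion patterns do), which is a useful reminder that they describe symptoms, not single causes.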

Playbook by profile: niche site owner, consultant/agency, SEO manager

For niche site owners: less volume, more utility and assets that cannot be “summarized”

Priorities (3–5):

- Consolidate by cluster: one strong URL per topic, not 10 weak ones.

- Rework TOFU: from “definition” to a guide with judgment.

- Create a unique asset per cluster (checklist, template, decision table).

- Update top URLs with a light routine (monthly or bimonthly).

- Avoid scaling thin content even if it's “cheap” with AI.

Metrics to monitor:

- CTR and clicks by page/cluster (not just by keyword).

- Conversion or microconversion (subscription, lead, click to money page) through internal routes.

For consultants/agencies: content product + reporting focused on business

Priorities (3–5):

- Offer audits focused on SERP changes: inventory of queries, intent and risks.

- Roadmap by intention (TOFU/MOFU/BOFU) with a clear backlog of improvements.

- “Savable” deliverables: checklists, templates, prioritization matrix.

- Reporting focusing on pages and clusters that impact business, not just rankings.

- Editorial process: verification of claims, examples, updating and governance.

Metrics to monitor:

- Cluster evolution (impressions/clicks/CTR) + assisted conversions.

- Cannibalization and dispersion of impressions between URLs of the same topic.

For managers: governance, quality control and the advantage of own data

Priorities (3–5):

- AI use policy: what's allowed, what requires review, what's prohibited.

- Cluster ownership: managers, maintenance schedule and objectives by intention.

- Editorial and technical QA before indexing (avoid debt).

- Asset generation: studies, datasets, internal/publishable tools.

- Annotation and learning system: changes → measurement → decision.

Metrics to monitor:

- Performance by intent and funnel stage (not just “total traffic”).

- Signs of trust: mentions, authorship, engagement, qualitative feedback.

How to use Makeit Tool to adapt faster

A SEMRush-type suite like Makeit Tool is useful if you use it as a working system, not as a “vanity dashboard”. In an environment with more dynamic SERPs, it usually adds value for researching demand, prioritizing actions, monitoring changes and benchmarking competitors.

Detect SERP changes and opportunities by intent (not intuition)

Operating flow:

- List queries and key pages (those that already bring business or potential).

- Group by intent (definition, comparison, implementation, decision).

- Prioritize by potential (demand, conversion value, gap against competition).

- Map to URLs (one main URL per intent/cluster) and detect gaps.

The key is to avoid the “more keywords = more URLs” trap: in 2026 it usually works better to improve coverage and utility within clusters.
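The “prioritize by potential” and “map to URLs” steps above can be sketched as a simple scoring pass. Everything here is a hypothetical model, not a Makeit Tool feature: the score multiplies estimated monthly demand, conversion rate, value per conversion and a 0–1 “gap” you assign after reviewing competitors, and any intent with no mapped URL is reported as a coverage gap.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Opportunity:
    query: str
    intent: str
    monthly_demand: int          # estimated searches per month
    conversion_rate: float       # expected conversions per visit
    value_per_conversion: float  # business value of one conversion
    gap: float                   # 0 = on par with competitors, 1 = they are clearly more useful
    url: Optional[str] = None    # main URL for this intent/cluster, if one exists


def score(o):
    """Hypothetical potential score: demand x conversion rate x value x gap."""
    return o.monthly_demand * o.conversion_rate * o.value_per_conversion * o.gap


def build_backlog(opportunities):
    """Rank opportunities by score and list intents that have no main URL yet."""
    ranked = sorted(opportunities, key=score, reverse=True)
    unmapped_intents = sorted({o.intent for o in opportunities if o.url is None})
    return ranked, unmapped_intents


# Usage with illustrative estimates.
backlog, gaps = build_backlog([
    Opportunity("seo audit checklist", "how-to", 1000, 0.01, 200.0, 0.5, "/audit"),
    Opportunity("makeit vs semrush", "comparison", 300, 0.05, 200.0, 0.8),
    Opportunity("what is a serp", "definition", 5000, 0.001, 200.0, 0.2, "/serp"),
])
for o in backlog:
    print(f"{score(o):8.1f}  {o.query}  ->  {o.url or 'NO URL'}")
print("Intents without a main URL:", gaps)
```

Multiplying rather than adding the factors means any near-zero factor (little demand, little value, or no gap to close) pushes an item down the backlog; the estimates themselves remain the weak point and deserve review.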

Monitor competitors: what they publish, what they update and where they outperform you in “usefulness”

To keep the analysis from stopping at “they rank higher”, review patterns:

- Structure: Do they respond in layers and with citable blocks?

- Examples: do they show tests, captures, criteria, cases?

- Assets: do they have tables, templates, tools?

- Freshness: Do they update with actual dates and changes?

- Coverage: Do they cover sub-topics that your piece ignores?

From there comes an actionable backlog: not “write more”, but “improve X block, add Y criteria, create Z asset”.

Common Mistakes When “Involving AI” in SEO (and How to Avoid Them)

Common (and avoidable) errors when introducing AI into the workflow:

- Publishing generic content that adds no criteria.

- Including invented claims (statistics, “trends”, causal links).

- Creating too many URLs and generating cannibalization.

- A promotional tone, or one “optimized to sound good” rather than to help.

- Not updating: letting pieces that used to be top performers decay.

- Measuring only by keyword: drawing wrong conclusions from noise.

AI accelerates both the good and the bad. If there's no publishing system, the risk grows with volume.

Publishing more is not a strategy: when consolidating beats expanding

Consolidating usually wins when:

- You have multiple URLs competing for the same intent.

- Impressions are scattered and CTR is low.

- The content is similar (variants per keyword with no real value).

- There are “thin” pages that don't justify indexing.

A practical guideline: it's more cost-effective to improve 10 key URLs (utility, structure, examples, linking) than to create 100 new pages without differentiation.
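The first two consolidation signals (several URLs competing for the same intent, scattered impressions) can be checked from a query+page export. A minimal sketch, assuming rows of `(query, page, impressions)` exported from Search Console: it flags queries where two or more URLs each take a meaningful share of impressions. The 20% share and 100-impression floor are hypothetical thresholds to tune, and a flag is a prompt to review intent, not an automatic merge.

```python
from collections import defaultdict


def find_cannibalization(rows, min_share=0.2, min_impressions=100):
    """Return {query: {page: share}} for queries where 2+ pages each get >= min_share of impressions."""
    by_query = defaultdict(lambda: defaultdict(int))
    for query, page, impressions in rows:
        by_query[query][page] += impressions

    flagged = {}
    for query, pages in by_query.items():
        total = sum(pages.values())
        if total < min_impressions:
            continue  # too little data to judge
        competing = {p: round(i / total, 2) for p, i in pages.items() if i / total >= min_share}
        if len(competing) >= 2:
            flagged[query] = competing
    return flagged


# Usage with illustrative rows.
rows = [
    ("crawl budget", "/crawl-budget", 600),
    ("crawl budget", "/crawl-budget-guide", 400),
    ("crawl budget", "/blog/old-post", 5),
    ("seo audit", "/audit", 900),
    ("seo audit", "/audit-old", 50),
]
print(find_cannibalization(rows))
```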

Using AI without verification: the cost of a single “hallucination” in reputation

A single false statement can damage trust, generate negative links, or force you to rework the entire cluster. Minimum verification checklist:

- Does the data have source and date?

- Is the statement conditional (“depends on...”) when appropriate?

- Are there explicit limits and no promises?

- Is the example real (or clearly illustrative) and not misleading?

- Does a human reviewer understand and validate it?

In SEO, trust is a cumulative asset; losing it often costs more than gaining it.
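Part of the verification checklist above can be pre-screened automatically before the human review. The sketch below is a rough heuristic, not a fact-checker: it assumes a hypothetical house convention of marking sources inline as `[source: ..., date]`, flags sentences containing percentages or multipliers that lack such a marker, and flags common promise wording. The human reviewer still makes the call.

```python
import re

SENTENCE_SPLIT = re.compile(r"(?<=[.!?])\s+")
FIGURE = re.compile(r"\d+(?:[.,]\d+)?\s*%|\b\d+(?:\.\d+)?x\b")     # e.g. "35%", "3x"
SOURCE_MARKER = re.compile(r"\[source:[^\]]+\]", re.IGNORECASE)    # hypothetical convention
PROMISE = re.compile(r"\b(guarantee[sd]?|always ranks?|never fails?)\b", re.IGNORECASE)


def flag_claims(text):
    """Return (reason, sentence) pairs that a human reviewer should check."""
    flags = []
    for sentence in SENTENCE_SPLIT.split(text.strip()):
        if FIGURE.search(sentence) and not SOURCE_MARKER.search(sentence):
            flags.append(("unsourced figure", sentence))
        if PROMISE.search(sentence):
            flags.append(("promise", sentence))
    return flags


draft = (
    "CTR dropped 35% on definition pages. "
    "Clicks grew 12% in Q1 [source: GSC export, 2026-02-01]. "
    "This method guarantees top rankings."
)
for reason, sentence in flag_claims(draft):
    print(f"{reason}: {sentence}")
```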

FAQs about the impact of AI on SEO

Is AI going to “kill” SEO or is it just transforming it?

It's transforming it. AI changes the SERP, reduces clicks on simple informational queries and raises the standard of utility and trust, but SEO is still key to being discovered, being cited as support and capturing clicks when the user needs depth. Adaptation involves intention, clusters and demonstrable quality.

Do you need to optimize differently for AI Overviews or AI Mode?

Google has indicated that there are no “special” requirements other than doing basic SEO well: crawlable and indexable content, useful, clear and reliable. In practice, it helps to write citable blocks (direct answers + development), maintain semantic consistency and provide evidence, not as a “hack”, but as good editorial quality.

Does Google penalize content made with AI?

Not necessarily for using AI, but for publishing worthless scaled content, spam, or unreliable information. AI can be an accelerator if there is editorial control: data verification, nuances, own examples and human review. The real risk is usually in volume + generic + lack of verification.

What types of content become more valuable with AI in the SERP?

Content that helps to decide and execute tends to gain value: comparisons with criteria and trade-offs, implementation guides, templates and checklists, own examples and unique assets (studies, datasets, tools). They are pieces that are not completely consumed in a summary and provide confidence and practical utility.

How can I measure the impact of AI features if Search Console mixes everything up?

Some of the performance associated with these experiences is reflected in global Search Console data, so it should be measured with segmentation: by cluster, intent and page. Add annotations of changes, observe the presence of features in key SERPs and cross with conversions. The objective is to detect patterns (CTR falls, conversion changes) and decide actions.

What do I do tomorrow if I run niche sites and don't have a team?

Three steps: (1) audit your 10 URLs with the most impressions and detect “generic” content or cannibalization, (2) rewrite key sections in a citable format (direct answer + criteria + example) and add an asset (checklist/table), (3) measure for 2–4 weeks by page/cluster: impressions, CTR and a microconversion (internal click, lead, subscription).

Does it make sense to create content just to “get quoted” and not for clicks?

Yes, if you integrate it into a strategy: being cited can reinforce brand and authority, but it must be connected with internal routes to pages that solve the complete need. It is advisable to design citable blocks within pieces that also provide depth, examples and steps, to convert when the user needs to go beyond the summary.

How do I avoid cannibalization if I start to cover more sub-topics because of the “fan-out”?

Design clusters with one primary URL per intent and use internal sections and linking to cover subtopics, instead of creating many near-identical URLs. If you need several pages, clearly differentiate their purpose (definition vs comparison vs implementation) and check in GSC whether impressions are dispersed across URLs on the same topic.
